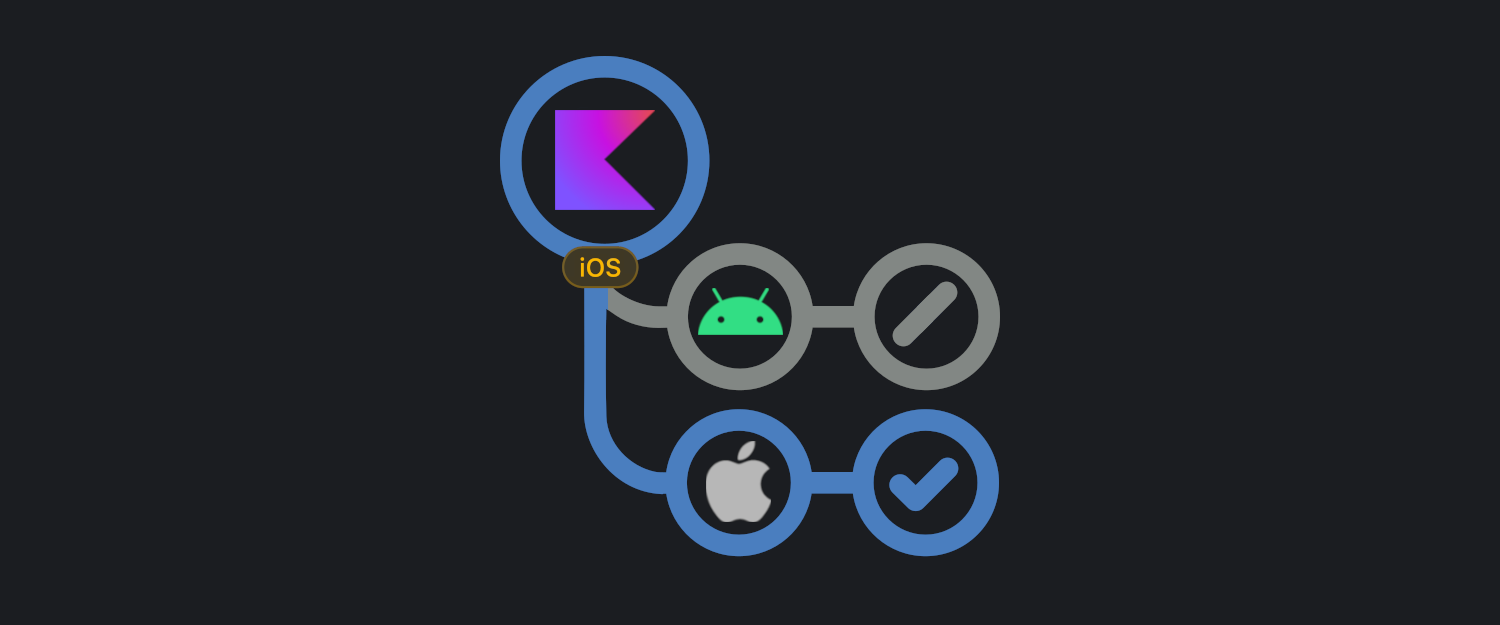

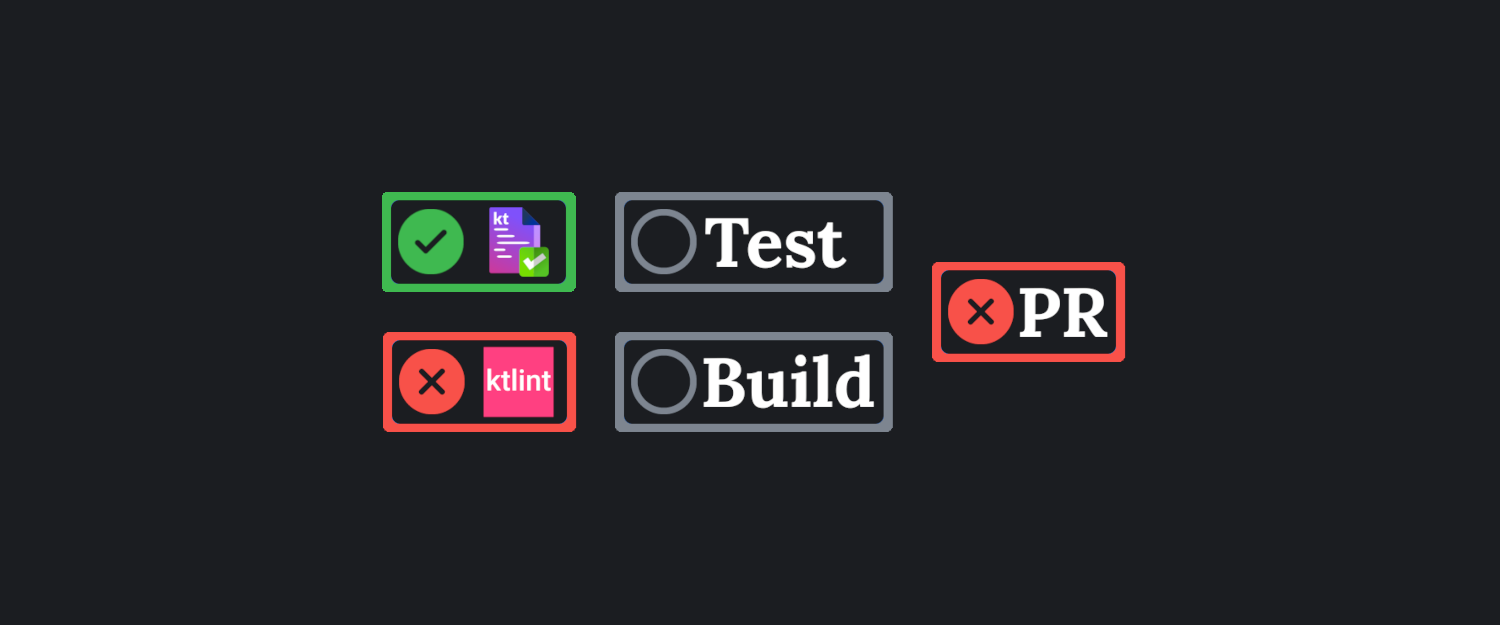

Testing is an important part of software development: a good automated test suite can catch bugs in a matter of minutes. Automated tests usually run as part of a Continuous Integration (CI) pipeline on a separate machine, with little involvement from the developer.

Compare that with manual testing, which usually means building the whole app, clicking through it, and sometimes even changing network responses to test edge cases (e.g. errors, missing data). All those manual steps add up quickly over a day (not to mention the whole lifetime of the project), costing developers a lot of time that could be spent elsewhere.

However, a good automated test suite needs to cover all edge cases for the important parts of the code. Important code could be complex logic that everyone is scared to touch for fear of breaking it. It might also be code that changes often, where a small oversight can introduce regressions (i.e. bugs).

Unfortunately, building a good test suite isn't easy: every developer must be diligent and add tests for every logic condition. When the author forgets to do so, a code reviewer should catch this and point out the missing test cases.

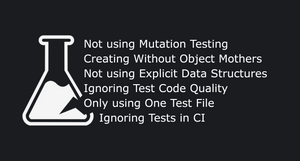

The good news is that Mutation Testing is an approach for quickly catching missing test cases.

Automated testing is important for efficient software development, catching bugs within minutes and saving valuable developer time. To ensure a robust test suite, diligence is required from every developer.

What's mutation testing

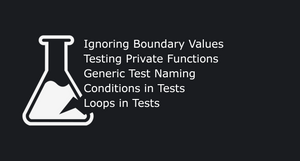

In simple terms, Mutation Testing involves changing the production code and then running the tests. If there's a failure after this change, then usually this condition is covered by tests*. However, if all tests pass, then most likely there is a missing test case for this condition.

* Even if a test fails after the "mutation", it's worth double-checking that it fails for the right reason. For example, a test that focuses on some other part of the production code might fail only as a side effect of the mutation.
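To make the idea concrete, here is a minimal sketch that automates it in miniature. Everything in it (`isAdult`, the two mutants and the two tests) is hypothetical and only illustrates the loop of changing the code and re-running the tests. In the rest of this article the mutations are done by hand.

```kotlin
// An implementation of the function under test, so that mutants can be swapped in.
typealias Implementation = (Int) -> Boolean

// Hypothetical production code.
val isAdult: Implementation = { age: Int -> age >= 18 }

// Hypothetical mutants: small, deliberate changes to the production logic.
val mutants: Map<String, Implementation> = mapOf(
    "boundary 18 -> 19" to { age: Int -> age >= 19 },
    "negated condition" to { age: Int -> age < 18 },
)

// Each test returns true when it passes for the given implementation.
val tests: List<(Implementation) -> Boolean> = listOf(
    { impl: Implementation -> impl(30) },  // adults are accepted
    { impl: Implementation -> !impl(10) }, // children are rejected
)

// A mutant "survives" when every test still passes against it.
fun survivingMutants(): List<String> =
    mutants.filter { (_, mutant) -> tests.all { test -> test(mutant) } }.keys.toList()

fun main() {
    println(tests.all { test -> test(isAdult) }) // true: the suite is green for the original
    println(survivingMutants())                  // [boundary 18 -> 19]
}
```

The surviving mutant points at the missing test case: nothing checks the value 18 itself.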

Boundary values example

Take a look at this example production code:

    enum class Priority {
        LOW,
        HIGH,
    }

    fun convertToPriority(value: Int): Priority? {
        if (value !in 0..100) return null

        return if (value <= 100 && value > 50) {
            Priority.HIGH
        } else {
            Priority.LOW
        }
    }

The logic could be simplified, but I wanted to keep it this way to simulate logic with a lot of conditions which might be tricky to test. The current test suite for this code has gaps that would let bugs slip through undetected:

    @Test
    fun `When value is -1 then null is returned`() {
        convertToPriority(-1) shouldBe null
    }

    @Test
    fun `When value is 101 then null is returned`() {
        convertToPriority(101) shouldBe null
    }

    @Test
    fun `When value is 51 then High priority is returned`() {
        convertToPriority(51) shouldBe Priority.HIGH
    }

    @Test
    fun `When value is 50 then Low priority is returned`() {
        convertToPriority(50) shouldBe Priority.LOW
    }

Looking at the test cases above, it might seem that everything is covered. However, when it comes to boundary values, it's really important to test all of them. In the function above, the boundary values are 100, 50 and 0.

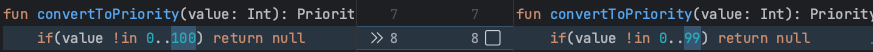

Catching the missing test cases is as easy as changing the upper boundary value to something smaller, like 99:
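As an illustration, the mutation might look like the sketch below. It assumes the changed bound is the one in the second condition (the range in the first condition could be mutated the same way), and the name `convertToPriorityMutant` is made up for this example.

```kotlin
enum class Priority { LOW, HIGH }

// Mutant of convertToPriority: the upper bound is lowered from 100 to 99.
fun convertToPriorityMutant(value: Int): Priority? {
    if (value !in 0..100) return null

    return if (value <= 99 && value > 50) { // was: value <= 100
        Priority.HIGH
    } else {
        Priority.LOW
    }
}

fun main() {
    // The four existing expectations still hold against the mutant...
    check(convertToPriorityMutant(-1) == null)
    check(convertToPriorityMutant(101) == null)
    check(convertToPriorityMutant(51) == Priority.HIGH)
    check(convertToPriorityMutant(50) == Priority.LOW)

    // ...even though the behaviour at the boundary has changed.
    println(convertToPriorityMutant(100)) // prints LOW; the original returns HIGH
}
```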

The production code treats 100 as a High priority, so that's probably the requirement. However, after this change, all tests still pass, meaning that there's a missing test case for checking it:

@Test

fun `When value is 100 then High priority is returned`() {

convertToPriority(100) shouldBe Priority.HIGH

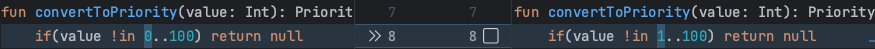

}Another missing test case is for the lower boundary in the first condition:
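A sketch of that mutation, assuming the range in the first condition is narrowed from `0..100` to `1..100` (the function name is hypothetical):

```kotlin
enum class Priority { LOW, HIGH }

// Mutant of convertToPriority: the lower bound of the valid range is raised from 0 to 1.
fun convertToPriorityLowerMutant(value: Int): Priority? {
    if (value !in 1..100) return null // was: value !in 0..100

    return if (value <= 100 && value > 50) {
        Priority.HIGH
    } else {
        Priority.LOW
    }
}

fun main() {
    // None of the existing tests uses 0, so they cannot notice the change.
    check(convertToPriorityLowerMutant(-1) == null)
    check(convertToPriorityLowerMutant(101) == null)
    check(convertToPriorityLowerMutant(51) == Priority.HIGH)
    check(convertToPriorityLowerMutant(50) == Priority.LOW)

    println(convertToPriorityLowerMutant(0)) // prints null; the original returns LOW
}
```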

All tests still pass, so the following test needs to be added:

    @Test
    fun `When value is 0 then Low priority is returned`() {
        convertToPriority(0) shouldBe Priority.LOW
    }

Mutation testing Cheat Sheet

Every condition like ifs, filters or anything which uses a boolean can be easily mutated by:

- Commenting it out altogether (removing a condition branch)

- Changing boundary values in comparisons (like in the example above)

- Negating the condition (e.g. using !, .not(), or switching from any to all)

Another kind of mutation is deliberately returning a hard-coded value (e.g. an empty list, an error or null).
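The mutations from this cheat sheet can be sketched on a small, made-up example. `Order`, `hasUnpaidOrders` and the mutant functions below are all hypothetical names chosen for illustration:

```kotlin
data class Order(val paid: Boolean)

// Hypothetical production code: is at least one order still unpaid?
fun hasUnpaidOrders(orders: List<Order>): Boolean = orders.any { !it.paid }

// Mutant 1: the condition is negated.
fun mutantNegated(orders: List<Order>): Boolean = orders.any { it.paid }

// Mutant 2: `any` is switched to `all`.
fun mutantAll(orders: List<Order>): Boolean = orders.all { !it.paid }

// Mutant 3: a hard-coded value is returned.
fun mutantHardCoded(orders: List<Order>): Boolean = true

fun main() {
    val singleUnpaid = listOf(Order(paid = false))
    // A lone "unpaid order is detected" test only catches the negated mutant.
    println(mutantNegated(singleUnpaid))   // false -> test fails, mutant caught
    println(mutantAll(singleUnpaid))       // true  -> test passes, case missing
    println(mutantHardCoded(singleUnpaid)) // true  -> test passes, case missing

    // Two extra cases catch the remaining mutants.
    val mixed = listOf(Order(paid = true), Order(paid = false))
    val allPaid = listOf(Order(paid = true))
    println(mutantAll(mixed))         // false, while the original returns true
    println(mutantHardCoded(allPaid)) // true, while the original returns false
}
```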

Mutation Testing validates test suites by altering production code logic. When tests pass despite code mutations, it signals missing test cases. Special attention should be paid to boundary values and conditions.

Why Code Coverage doesn't tell the whole truth

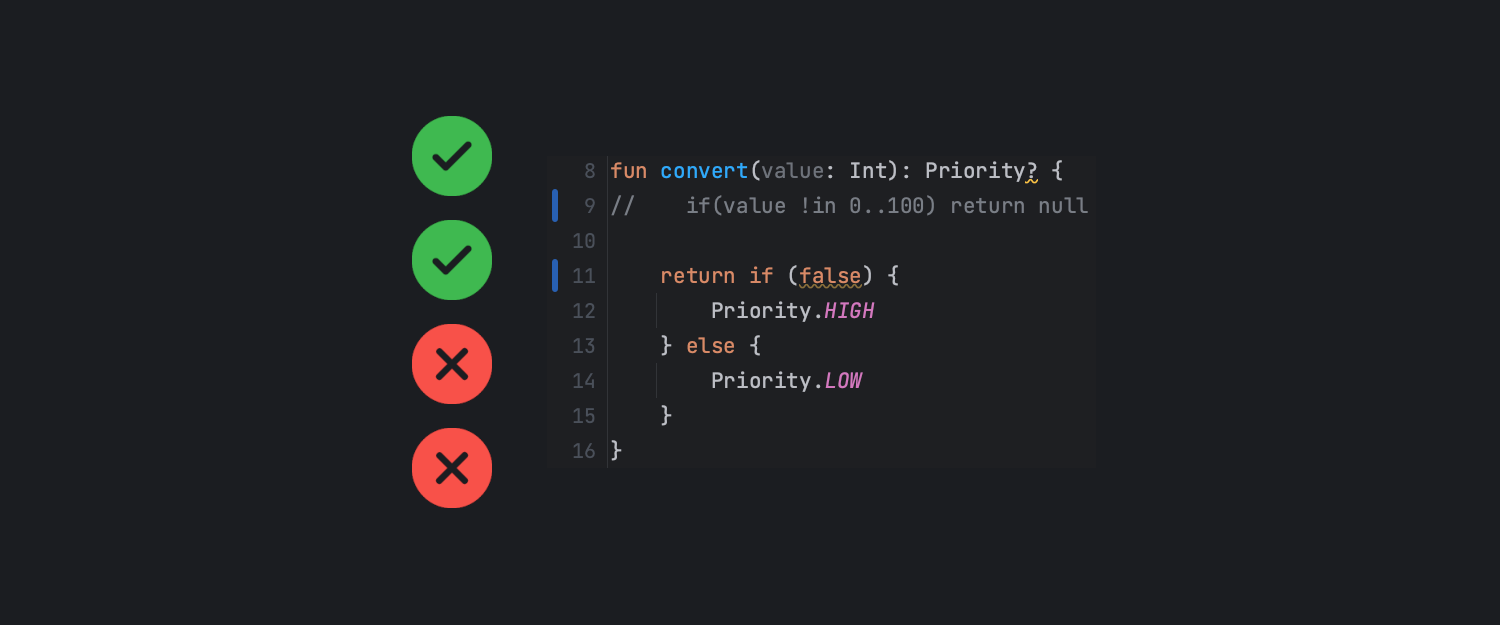

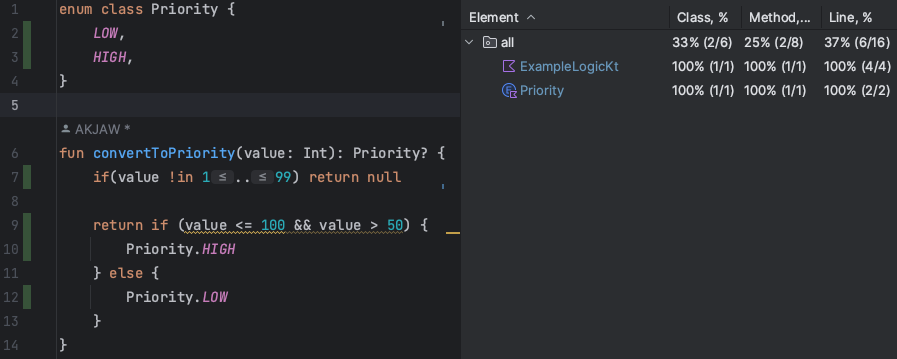

Code coverage is a misleading metric. Look at this example:
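One way to reproduce this without a coverage tool is the rough sketch below. The `executedBranches` set is a hand-rolled stand-in for a real coverage report (an assumption made for illustration), and it is fed with the inputs of the four original tests:

```kotlin
enum class Priority { LOW, HIGH }

// Hand-rolled stand-in for a coverage tool: records which branches were executed.
val executedBranches = mutableSetOf<String>()

fun convertToPriorityTraced(value: Int): Priority? {
    if (value !in 0..100) {
        executedBranches += "out of range"
        return null
    }
    return if (value <= 100 && value > 50) {
        executedBranches += "high"
        Priority.HIGH
    } else {
        executedBranches += "low"
        Priority.LOW
    }
}

fun main() {
    // Inputs used by the four original tests; 0 and 100 are never passed in.
    listOf(-1, 101, 51, 50).forEach { convertToPriorityTraced(it) }
    println(executedBranches) // [out of range, high, low]
}
```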

Looking at the above result, it seems that everything is covered. However, as previously pointed out, the boundary values of the above code are not fully tested. So even though every line is covered, the test suite is not complete and is missing some cases.

Coverage can still be used for spotting untested lines. For complex conditions, however, it can be misleading and give false confidence that every case is covered.

Code Coverage cannot be used as a metric for how good a test suite actually is. It gives misleading confidence, which makes it easier to introduce bugs into production.

Writing Code

When I'm writing code, most of the time I'm using Test Driven Development, which in practice should mean that there is no production code that doesn't have a corresponding test.

There are cases where I don't use TDD, or where the logic is so complex that I can't figure out all the test cases up front. When that happens, Mutation Testing comes in handy, because it quickly lets me find the missing cases.

Reviewing Code

When reviewing code which has tests, I usually open both the production code and the tests side by side in a split view. I first read through the test cases and try to understand the requirements of the class.

After I'm done checking the existing code, I try to find any missing cases through mutation testing. Thanks to this, I don't need to spend a lot of time scanning through all test cases and checking the corresponding production code.

Besides finding missing test cases, mutation testing can also find tests which are passing for the wrong reason. For example, the production code uses condition A and B. The test verifies that when condition A is met, it passes. However, through mutation testing, condition A is removed but the test still passes. This means that the test passes for the wrong reason and needs to be changed.
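A small sketch of such a situation, using a made-up `canRent` function where condition A is the age check and condition B is the licence check:

```kotlin
// Hypothetical production code that depends on two conditions.
fun canRent(age: Int, hasLicense: Boolean): Boolean =
    age >= 18 /* condition A */ && hasLicense /* condition B */

// Mutant: condition A is removed.
fun canRentMutant(age: Int, hasLicense: Boolean): Boolean = hasLicense

fun main() {
    // "When the driver is an adult then renting is allowed"
    check(canRent(age = 30, hasLicense = true))
    // The same expectation holds without condition A, so the test never proved it.
    check(canRentMutant(age = 30, hasLicense = true))

    // A test that isolates condition A tells the two versions apart.
    println(canRent(age = 17, hasLicense = true))       // false
    println(canRentMutant(age = 17, hasLicense = true)) // true
}
```

In this sketch the original test passes because of condition B alone. Keeping B satisfied while violating A is what makes the test depend on the condition it claims to verify.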

Summary

To ensure that something is properly tested, you first have to fully understand the code. Mutation testing is a kind of shortcut for this. The rule of thumb is that if changing the code doesn't cause a test to fail, then some test case is missing.

This technique is helpful for driving good test coverage, but it doesn't need to be used every time. Some less important classes don't need perfect test coverage.

The main focus for using mutation testing should be on code which:

- Is changed a lot (because changes can introduce regressions, and automated tests help with catching them)

- Is critical for the functionality of the software (having tests for stuff which is only used for development might be overkill)

- Has really complex logic, making it hard to change without breaking anything. In such situations, tests can act like a guiding lighthouse: if everything is green, proceed.

If you're interested in learning more about testing in general, check out my article series on this topic: